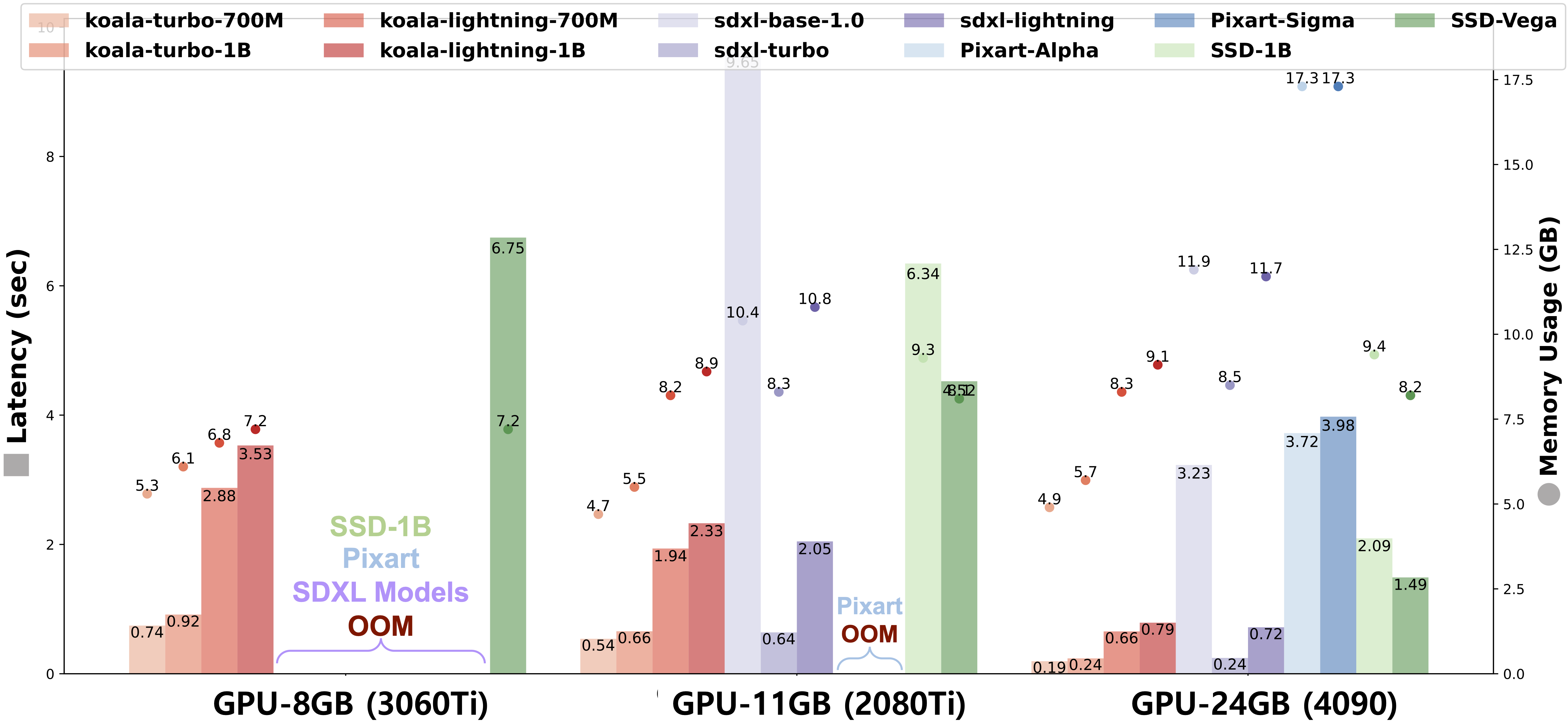

As text-to-image (T2I) synthesis models grow in size, their inference costs rise because they require more expensive GPUs with larger memory. This, together with restricted access to training datasets, makes these models hard to reproduce. Our study aims to reduce these inference costs and explores how far the generative capabilities of T2I models can be extended using only publicly available datasets and open-source models. To this end, using the de facto standard text-to-image model, Stable Diffusion XL (SDXL), we present three key practices for building an efficient T2I model: (1) Knowledge distillation: we explore how to effectively distill the generation capability of SDXL into an efficient U-Net and find that self-attention is the most crucial part. (2) Data: even with fewer samples, high-resolution images with rich captions matter more than a larger number of low-resolution images with short captions. (3) Teacher: a step-distilled teacher allows T2I models to reduce the number of denoising steps. Based on these findings, we build two types of efficient text-to-image models, KOALA-Turbo and KOALA-Lightning, each with two compact U-Nets (1B and 700M) that reduce the model size by up to 54% and 69%, respectively, relative to the SDXL U-Net. In particular, KOALA-Lightning-700M is 4x faster than SDXL while still maintaining satisfactory generation quality. Moreover, unlike SDXL, our KOALA models can generate 1024px high-resolution images on consumer-grade GPUs with 8GB of VRAM (3060Ti). We believe that our KOALA models will have a significant practical impact, serving as cost-effective alternatives to SDXL for academic researchers and general users in resource-constrained environments.

We measured the inference time of SDXL-Turbo and KOALA-Turbo models at a resolution of 512x512, and other models at 1024x1024, using a variety of consumer-grade GPUs: NVIDIA 3060Ti (8GB), 2080Ti (11GB), and 4090 (24GB). 'OOM' indicates Out-of-Memory. Note that SDXL models cannot operate on the 3060Ti with 8GB VRAM, whereas our KOALA models can run on all GPU types.
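As a rough illustration of how such latency numbers could be collected, the sketch below times a generation call over several runs after a short warm-up. It is not the measurement script used for the reported results; the helper name `measure_latency` and its `warmup`/`repeats` defaults are assumptions made for this example.

```python
import statistics
import time
from typing import Callable, Optional


def measure_latency(run_once: Callable[[], object],
                    sync: Optional[Callable[[], None]] = None,
                    warmup: int = 2,
                    repeats: int = 5) -> dict:
    """Time run_once() and return mean/min/max latency in seconds.

    `sync` is an optional callable that blocks until queued device work
    has finished (for example torch.cuda.synchronize on a CUDA GPU).
    """
    # Warm-up runs absorb one-time costs such as kernel compilation.
    for _ in range(warmup):
        run_once()
    if sync is not None:
        sync()

    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        if sync is not None:
            sync()  # make sure the GPU has finished before stopping the clock
        samples.append(time.perf_counter() - start)

    return {
        "mean_s": statistics.mean(samples),
        "min_s": min(samples),
        "max_s": max(samples),
        "runs": repeats,
    }
```

With a loaded pipeline `pipe`, a call might look like `measure_latency(lambda: pipe(prompt), sync=torch.cuda.synchronize)`. An out-of-memory failure on a small GPU would surface as an exception from `run_once` rather than as a timing.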

We follow the official inference setup of each model (SDM-v2.0 and SDXL-Base-1.0) in its Hugging Face repository. Specifically, SDM-v2.0 uses the DDIM scheduler with 25 steps, while SDXL and our models use the Euler discrete scheduler with 25 steps. All models use classifier-free guidance with a scale of 7.5. All images are generated with FP16 precision, except for DALLE-2, whose images we obtain via the OpenAI API.
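The SDXL-style setup described above (Euler discrete scheduler, 25 steps, guidance scale 7.5, FP16) can be sketched with the Hugging Face `diffusers` library roughly as follows. This is a minimal sketch, not the authors' evaluation script: `InferenceConfig` and `generate` are hypothetical names, and the default `model_id` points at the public SDXL-Base-1.0 checkpoint. A KOALA checkpoint id, which this text does not give, would be substituted there, assuming it loads through the same pipeline class.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceConfig:
    # Assumed default: the public SDXL-Base-1.0 repository id.
    model_id: str = "stabilityai/stable-diffusion-xl-base-1.0"
    num_inference_steps: int = 25   # sampler steps stated in the text
    guidance_scale: float = 7.5     # classifier-free guidance scale
    use_fp16: bool = True           # FP16 precision

    def call_kwargs(self) -> dict:
        """Keyword arguments passed to the pipeline call."""
        return {
            "num_inference_steps": self.num_inference_steps,
            "guidance_scale": self.guidance_scale,
        }


def generate(prompt: str, cfg: InferenceConfig = InferenceConfig()):
    """Generate one image with the settings in cfg (requires a CUDA GPU)."""
    # Imported lazily so the config can be inspected without these packages.
    import torch
    from diffusers import EulerDiscreteScheduler, StableDiffusionXLPipeline

    dtype = torch.float16 if cfg.use_fp16 else torch.float32
    pipe = StableDiffusionXLPipeline.from_pretrained(cfg.model_id, torch_dtype=dtype)
    # Swap in the Euler discrete scheduler, reusing the checkpoint's scheduler config.
    pipe.scheduler = EulerDiscreteScheduler.from_config(pipe.scheduler.config)
    pipe = pipe.to("cuda")
    return pipe(prompt=prompt, **cfg.call_kwargs()).images[0]
```

Calling `generate("a photo of a koala")` returns a PIL image. Keeping the settings in one frozen config object makes it easier to apply the same steps and guidance scale to every model being compared.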

@misc{Lee@koala,
  title={KOALA: Empirical Lessons Toward Memory-Efficient and Fast Diffusion Models for Text-to-Image Synthesis},
  author={Youngwan Lee and Kwanyong Park and Yoorhim Cho and Yong-Ju Lee and Sung Ju Hwang},
  year={2023},
  eprint={2312.04005},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}